
Philippe Kunzle

ID: *****9866


Neural network artificial intelligence is inspired by how brains work. Our brain is composed of about $10^{11}$ neuron cells (H

Neural networks come in different flavors: backpropagation networks, competitive networks and Grossberg networks, to mention just a few. The one we are going to look at is the auto-associative network.

We can teach an auto-associative network to recognize some patterns. Once it is trained, we can give it a partially erased or noisy pattern, and the network will be able to guess what the pattern is supposed to be.

In this project we use the causal block diagram (CBD) formalism to build an auto-associative neural network. There are two parts: training the neural network to recognize two different patterns, and running the network in order to recognize those patterns.

Let's first understand a little better what an auto-associative network does. For example, suppose we train the network to recognize the letters A and B:

A can be represented as an array of size 15: [1,1,1,1,-1,1,1,1,1,1,-1,1,1,-1,1].

Similarly, B can be represented as: [1,1,-1,1,-1,1,1,1,-1,1,-1,1,1,1,-1].
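To see how these 15 numbers form letters, here is a small Python sketch that prints each vector as a grid. It assumes (the text does not say so) that the pixels are stored row by row in 5 rows of 3 columns, with 1 as a filled pixel and -1 as an empty one; `render` is a hypothetical helper name.

```python
# Hypothetical helper: print a 15-element bipolar vector as a 5x3 grid.
# Assumption: row-major layout, 5 rows x 3 columns, 1 = "#", -1 = ".".
A = [1, 1, 1, 1, -1, 1, 1, 1, 1, 1, -1, 1, 1, -1, 1]
B = [1, 1, -1, 1, -1, 1, 1, 1, -1, 1, -1, 1, 1, 1, -1]


def render(pattern, rows=5, cols=3):
    """Return the pattern as text, one line per row of pixels."""
    assert len(pattern) == rows * cols
    lines = []
    for r in range(rows):
        row = pattern[r * cols:(r + 1) * cols]
        lines.append("".join("#" if v == 1 else "." for v in row))
    return "\n".join(lines)


print(render(A))
print()
print(render(B))
```

With this layout A prints as `###`, `#.#`, `###`, `#.#`, `#.#` and B as `##.`, `#.#`, `##.`, `#.#`, `##.`, which suggests the 5x3 reading is the intended one.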

And we could go on with many more shapes or letters.

Once the network is trained, we can enter a partially erased A:

Or a noisy A:

and the network should return:

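The neural network could be trained to recognize more patterns in the same way, although the number of patterns it can reliably store is limited by the number of inputs and by how similar the patterns are to each other.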
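The recovery of a noisy A can be sketched in Python for the 15-pixel case. This minimal sketch assumes the same outer-product training rule and HardLimits activation that this report uses later for the 4-pixel network, and it models a "noisy" A as A with one pixel flipped; `train`, `hard_limits` and `recall` are hypothetical names.

```python
# Sketch: auto-associative recall of a noisy 15-pixel A.
# Assumptions: W = sum of p p^T over stored patterns; HardLimits(0) = 1.
A = [1, 1, 1, 1, -1, 1, 1, 1, 1, 1, -1, 1, 1, -1, 1]
B = [1, 1, -1, 1, -1, 1, 1, 1, -1, 1, -1, 1, 1, 1, -1]


def train(patterns):
    """W[i][j] = sum over the stored patterns of p[i] * p[j]."""
    n = len(patterns[0])
    return [[sum(p[i] * p[j] for p in patterns) for j in range(n)]
            for i in range(n)]


def hard_limits(x):
    # The text only defines x > 0 -> 1 and x < 0 -> -1; 0 -> 1 is assumed.
    return 1 if x >= 0 else -1


def recall(W, image):
    """Multiply W by the image vector, then apply HardLimits to each entry."""
    return [hard_limits(sum(w * v for w, v in zip(row, image))) for row in W]


W = train([A, B])
noisy_A = list(A)
noisy_A[4] = -noisy_A[4]  # flip the centre pixel of the second row

print(recall(W, noisy_A) == A)  # True
```

The pixel is corrected because W times noisy_A equals 13·A + 5·B here, and every entry of that sum has the sign of A. With more flipped pixels the two terms can cancel, which is where the limit on stored patterns starts to show.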

However, a 15-input neural network requires a 15x15 matrix of weights, which would make a huge causal block diagram. To keep the diagram readable while still showing the properties of such a network, we will use a 4-input neural network representing a 4-pixel image. The neural network will be trained to recognize 2 patterns:

![]() and ![]() .

We now need to compute the weights of the neural network. The formula given by the book "Neural Network Design" is as follows:

$$W = p_1 p_1^T + p_2 p_2^T$$

where W is the matrix of weights, p1 is pattern #1 (![]()) and p2 is pattern #2 (![]()), the two patterns that we want our neural network to recognize. In our example, p1 and p2 are 4D vectors.
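The training rule W = p1 p1^T + p2 p2^T can be sketched in plain Python as a sum of outer products. The two pattern images are not legible in this copy, so the vectors below are an assumption: p1 = [1,-1,-1,1] and p2 = [-1,1,1,-1] are the only pair consistent with the weight matrix and the recall example reported later in this report (their order does not change W). `outer` and `mat_add` are hypothetical helpers.

```python
# Hebb-style training: W = p1 p1^T + p2 p2^T.
# Assumed patterns, inferred from the reported weights (images are lost).
p1 = [1, -1, -1, 1]
p2 = [-1, 1, 1, -1]


def outer(u, v):
    """Outer product u v^T as a list of rows."""
    return [[ui * vj for vj in v] for ui in u]


def mat_add(M, N):
    """Element-wise sum of two matrices of the same shape."""
    return [[m + n for m, n in zip(rm, rn)] for rm, rn in zip(M, N)]


W = mat_add(outer(p1, p1), outer(p2, p2))
for row in W:
    print(row)
```

Because p2 = -p1 here, the two outer products are identical, so W is simply twice p1 p1^T.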

The computation of each weight in the matrix is actually pretty simple:

$$w[i,j] = p_1[i]\,p_1[j] + p_2[i]\,p_2[j]$$

where w[i,j], p1[i], p1[j], p2[i] and p2[j] are all scalar numbers.

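Because each pattern is multiplied by its own transpose, p1[i] p1[j] = p1[j] p1[i] (and likewise for p2), so W[1,2] = W[2,1] and, in general, W[i,j] = W[j,i]. The matrix of weights is therefore symmetric, so we only need to compute 10 weights instead of 16: the 4 on the diagonal and the 6 above it.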
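The saving from symmetry can be sketched by computing only the entries on or above the diagonal and mirroring them into the full matrix. The dictionary keys "11", "12", ... imitate the constant names used in the diagrams (row digit, then column digit). The patterns p1 = [1,-1,-1,1] and p2 = [-1,1,1,-1] are an assumption, inferred from the weight matrix given later because the pattern images are not legible.

```python
# Sketch: compute only the distinct weights (i <= j), then mirror them.
# Assumed patterns, inferred from the reported weights.
p1 = [1, -1, -1, 1]
p2 = [-1, 1, 1, -1]
n = len(p1)

# One entry per distinct weight, named like the diagram constants.
unique = {}
for i in range(n):
    for j in range(i, n):  # diagonal and upper triangle only
        unique[f"{i + 1}{j + 1}"] = p1[i] * p1[j] + p2[i] * p2[j]

# W[i][j] and W[j][i] share the same named weight.
W = [[unique[f"{min(i, j) + 1}{max(i, j) + 1}"] for j in range(n)]
     for i in range(n)]

print(len(unique))                   # 10
print(n * n, 15 * 15, 15 * 16 // 2)  # 16 225 120
```

The same count, n(n+1)/2, means a 15-input network would still need 120 distinct weights instead of 225.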

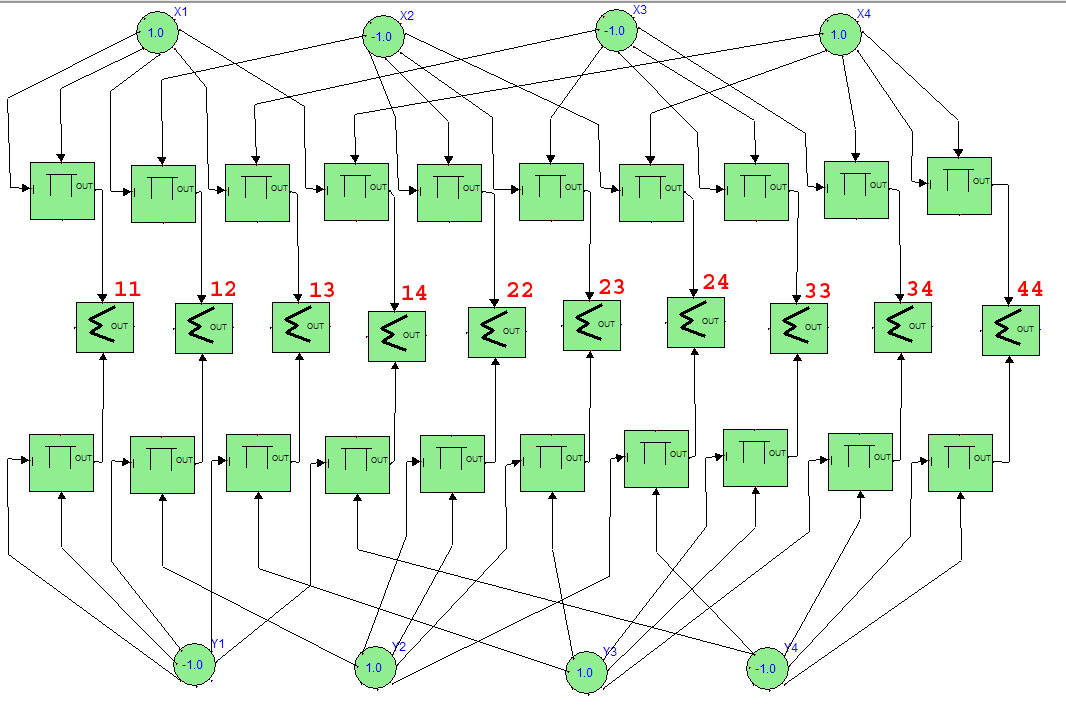

Here is the causal block diagram that shows the computation of the weights. The patterns are x1, x2, x3, x4 and y1, y2, y3, y4, and the weights of the matrix are marked in red.

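The different weights are placed in the matrix as follows, where each entry is the name of a weight in the diagram (for example, 12 is the weight shared by row 1, column 2 and row 2, column 1):

W =

| 11 | 12 | 13 | 14 |
| 12 | 22 | 23 | 24 |
| 13 | 23 | 33 | 34 |
| 14 | 24 | 34 | 44 |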

Once the values are computed, we get the following matrix of weights:

$$
W = \begin{bmatrix}
2 & -2 & -2 & 2 \\
-2 & 2 & 2 & -2 \\
-2 & 2 & 2 & -2 \\
2 & -2 & -2 & 2
\end{bmatrix}
$$

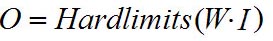

We can now use our trained neural network to recognize patterns with:

$$O = \mathrm{HardLimits}(W I)$$

where W is the matrix we just computed and I is a 4D vector representing the 4-pixel image we want to recognize. HardLimits is applied to each entry of W I: if the value is larger than 0, it returns 1, and if the value is smaller than 0, it returns -1.
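Running the network is a matrix-vector product followed by HardLimits on each entry. Here is a minimal Python sketch using the weight matrix computed above. Mapping 0 to 1 is our assumption, since the text only defines HardLimits for values above and below 0; `hard_limits` and `recall` are hypothetical names.

```python
# Trained weights for the 4-pixel network (values from the matrix above).
W = [
    [2, -2, -2, 2],
    [-2, 2, 2, -2],
    [-2, 2, 2, -2],
    [2, -2, -2, 2],
]


def hard_limits(x):
    """1 for x > 0, -1 for x < 0; x == 0 -> 1 is an assumed convention."""
    return 1 if x >= 0 else -1


def recall(W, image):
    """Multiply W by the input vector, then apply HardLimits to each entry."""
    return [hard_limits(sum(w * v for w, v in zip(row, image))) for row in W]


print(recall(W, [1, 1, 1, -1]))  # [-1, 1, 1, -1]
```

For the input [1,1,1,-1] this reproduces the output reported for the diagram.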

In this CBD, the constants named 11, 12, ... are the different weights of our neural network, and I1, I2, I3 and I4 are the pixels of the input image. In this case, we are entering [1,1,1,-1], which represents this image: ![]() .

O1, O2, O3, O4 are the outputs of the neural network, hopefully forming a recognized pattern.

The output of this neural network is

$$\begin{bmatrix} -1 \\ 1 \\ 1 \\ -1 \end{bmatrix}$$

which represents the following pattern: ![]() .
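As a hand check of this output, multiplying the weight matrix by the input [1,1,1,-1] and then applying HardLimits entry by entry gives:

$$
W I =
\begin{bmatrix}
2 & -2 & -2 & 2 \\
-2 & 2 & 2 & -2 \\
-2 & 2 & 2 & -2 \\
2 & -2 & -2 & 2
\end{bmatrix}
\begin{bmatrix} 1 \\ 1 \\ 1 \\ -1 \end{bmatrix}
=
\begin{bmatrix} -4 \\ 4 \\ 4 \\ -4 \end{bmatrix},
\qquad
\mathrm{HardLimits}(W I) = \begin{bmatrix} -1 \\ 1 \\ 1 \\ -1 \end{bmatrix}
$$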

Books often give only formulas, which don't let us see what is going on, and this is a perfect example: the formula to run the neural network seems very simple, since it is only the multiplication of a matrix by a vector. The causal block diagram formalism gives us a better view of what the neural network does. Training on 2 patterns already takes many computations, and running the trained network is even more complex. And this is only for a 4-input neural network.
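To put rough numbers on this, here is a Python sketch that counts scalar operations for n inputs and k patterns. It assumes one block per two-input multiplication or addition and, for training, only the n(n+1)/2 distinct weights; the actual diagrams may group operations differently, and `training_ops` and `running_ops` are hypothetical names.

```python
# Rough operation counts, assuming one CBD block per 2-input mul/add.
def training_ops(n, k):
    """Each distinct weight sums k products p[i]*p[j]: k mults, k-1 adds."""
    weights = n * (n + 1) // 2
    return {"mul": weights * k, "add": weights * (k - 1)}


def running_ops(n):
    """Each of the n outputs is an n-term dot product plus one HardLimits."""
    return {"mul": n * n, "add": n * (n - 1), "hardlimits": n}


print(training_ops(4, 2))   # {'mul': 20, 'add': 10}
print(running_ops(4))       # {'mul': 16, 'add': 12, 'hardlimits': 4}
print(training_ops(15, 2))  # {'mul': 240, 'add': 120}
print(running_ops(15))      # {'mul': 225, 'add': 210, 'hardlimits': 15}
```

Under these assumptions the 4-input network needs 30 operations to train and 32 (plus 4 HardLimits) to run, while the 15-input network would need 360 and 435 (plus 15 HardLimits).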

As desired, when we enter a pattern that the network did not learn, such as ![]() , the neural network tries to find the closest pattern among those it learned and returns it: ![]()
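"Closest" can be made precise with the Hamming distance, the number of pixels that differ. This Python sketch runs all 16 possible 4-pixel inputs through the network and checks that the output is the learned pattern at the smaller distance. It assumes p1 = [1,-1,-1,1] and p2 = [-1,1,1,-1] (inferred from the weight matrix, since the images are lost) and that HardLimits maps 0 to 1; the helper names are hypothetical.

```python
# Sketch: check that recall returns the nearest learned pattern.
# Assumptions: inferred p1/p2; HardLimits(0) = 1.
from itertools import product

p1 = [1, -1, -1, 1]
p2 = [-1, 1, 1, -1]
W = [[p1[i] * p1[j] + p2[i] * p2[j] for j in range(4)] for i in range(4)]


def hard_limits(x):
    return 1 if x >= 0 else -1


def recall(image):
    """Multiply W by the image, then apply HardLimits to each entry."""
    return [hard_limits(sum(w * v for w, v in zip(row, image))) for row in W]


def hamming(u, v):
    """Number of positions where u and v differ."""
    return sum(a != b for a, b in zip(u, v))


ties = []
for image in product([1, -1], repeat=4):
    image = list(image)
    d1, d2 = hamming(image, p1), hamming(image, p2)
    if d1 == d2:
        ties.append(image)  # equally far from both patterns
        continue
    closest = p1 if d1 < d2 else p2
    assert recall(image) == closest

print(len(ties))  # 6
```

The 6 tied inputs are exactly 2 pixels away from both patterns. For them W I is the zero vector, so the output depends only on how HardLimits treats 0 (with the convention used here it is [1,1,1,1], which is not a learned pattern).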

Neural Network Design, Martin Hagan, Howard Demuth, Mark Beale, PWS Publishing Company, 1995.